Introduction

Ocellus - The next generation vision system for robotics and machines

Ocellus is an all-in-one package for industrial automation and IoT. It comes with a vision system that combines AI with traditional machine vision. It supports a wide range of cameras, including area scan cameras, 3D structured light cameras, Time-of-Flight cameras, laser profilers, line cameras, hyperspectral cameras, webcams and surveillance cameras, and can be used to control conveyors, lights, sensors and robots and to collect data for analytics.

Ocellus has a programming tool that allows users to wire together hardware devices, APIs, robots, and services in new and innovative ways. Its many image processing modules make it the ideal choice for anyone looking to streamline their manufacturing processes and optimize their operations.

With its plugin framework for custom modules and its cloud-based AI platform for training and tuning Neural Network models, Ocellus is a highly adaptable solution that can be tailored to the specific needs of each installation.

Ocellus also comes with a powerful dashboard for monitoring and visualization, providing users with real-time insights into their manufacturing processes. It can be easily integrated with existing ERP systems, Power BI, or databases, making it the ideal choice for anyone looking to optimize their operations.

We are committed to providing our customers with the highest level of service and support. We offer SLAs for online and offline solutions with monitoring, backup, surveillance, and security, ensuring that your operations are always running smoothly and efficiently. Whether you're looking to improve your manufacturing processes, automate your operations, or gain greater insight into your operations, Ocellus is the ultimate tool for industrial automation.

Highlights:

- Easy to Use GUI Interface

- Many Image Processing Modules

- Camera Agnostic: 2D/3D, Laser Profilers, Surveillance, Line Cameras, etc.

- API Available

- Framework for Custom Modules

- Visual Programming

- Artificial Intelligence

- Tracker Support

- Intrinsics and Camera Calibration

- Physics Engine

- Pose Estimation of any Object

- Supports many Industrial Protocols

- Robotics Support

- Dashboard for Analytics

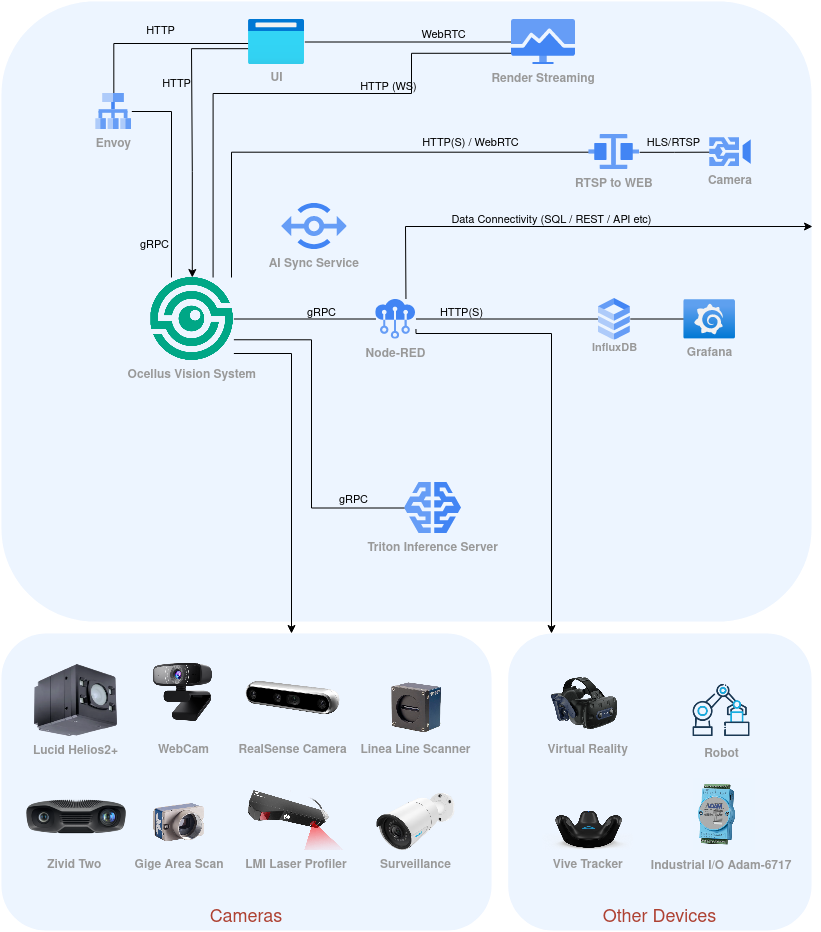

Ocellus System Overview

Ocellus Vision System

Ocellus is an intelligent vision system that uses artificial intelligence, machine learning, and computer vision to let machines or robots "see" and interpret visual data. This allows them to interact with the world in a more sophisticated manner, coordinate the manufacturing process, and provide real-time insight.

Envoy

Envoy is a service proxy used to offload Web UI traffic. It acts as a gateway between the client's browser and the vision system, transcoding between JSON and gRPC.

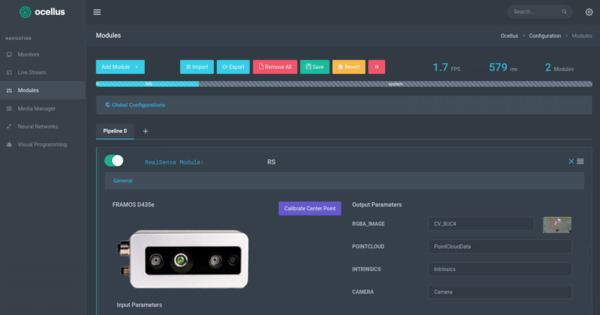

UI

To customize the detection and recognition capabilities of the vision system, users can utilize the web UI, accessible through a web browser. This interface allows them to configure modules, adjust camera parameters, and create custom image processing pipelines. They can capture screenshots and record videos for later analysis or AI training as well. They can observe the performance of each pipeline in real-time and make adjustments to ensure the system is operating at its optimal performance.

Render Streaming

Render Streaming provides high-quality rendering in the browser. It streams over WebRTC and lets the user view the Ocellus vision system application from a web browser.

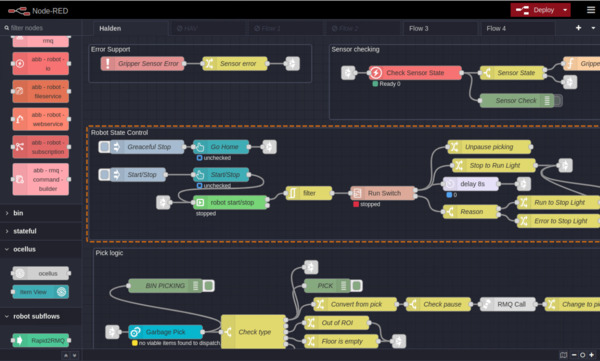

Node-RED

Node-RED is a flow-based programming tool that is used to create custom applications and automate workflows. It provides a visual programming interface that allows users to create complex workflows by connecting pre-built nodes together. These nodes can interface with various devices and systems within an industrial environment, such as programmable logic controllers (PLCs), sensors, and databases.

Triton Inference Server

Triton Inference Server is an AI inference server designed to provide high-performance inference for deep learning models. It leverages NVIDIA's optimized TensorRT library to accelerate inference on GPUs, delivering fast and efficient model execution.
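As a rough illustration of how a client might address Triton, the sketch below builds a request body for Triton's HTTP/REST inference endpoint (the KServe v2 protocol, `POST /v2/models/<model>/infer`). The model name `defect_detector`, the tensor name `input__0`, and the tensor shape are assumptions made for this example; they are not taken from Ocellus.

```python
import json


def build_infer_request(input_name, shape, datatype, data):
    """Build a KServe v2 inference request body for a single input tensor.

    `data` is the tensor flattened in row-major order.
    """
    expected = 1
    for dim in shape:
        expected *= dim
    if expected != len(data):
        raise ValueError(f"shape {shape} needs {expected} values, got {len(data)}")
    return {
        "inputs": [
            {"name": input_name, "shape": shape, "datatype": datatype, "data": data}
        ]
    }


# Hypothetical model and tensor names, used only for illustration.
MODEL_NAME = "defect_detector"
url_path = f"/v2/models/{MODEL_NAME}/infer"
body = build_infer_request("input__0", [1, 4], "FP32", [0.1, 0.2, 0.3, 0.4])

print(url_path)
print(json.dumps(body))
```

The resulting JSON would be sent as the body of an HTTP POST to the server's inference endpoint; the actual model and tensor names depend on the deployed model's configuration.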

Data Connectivity

Ocellus can connect with various data sources and report data into any database or ERP system. This connectivity allows for seamless integration with existing manufacturing systems, enabling efficient data transfer and streamlined data management. Because Ocellus reports data in real time, it gives up-to-date information about production processes and enables timely decision-making.

Grafana

Grafana is a powerful monitoring and visualization tool that can be used to track and analyze data from manufacturing processes. It enables users to quickly and easily create dashboards that provide insights into production performance, costs, and quality. It can also be used to monitor and alert on problems such as machine downtime, excessive energy usage, and environmental conditions. Grafana's dashboards are highly customizable and can be used to visualize data from a variety of sources, including databases, IoT sensors, and other applications. This makes it an ideal tool for manufacturers who need to track and analyze data in order to optimize their operations.

InfluxDB

InfluxDB is a database system optimized for storing, querying, and analyzing time series data, which makes it well suited to handling high write and query loads in large-scale applications. Ocellus uses InfluxDB to store system metrics, sensor data, and application performance metrics, which can then be visualized in, for example, Grafana.
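To make the kind of data stored here more concrete, the following sketch formats one metric sample in InfluxDB line protocol, the text format InfluxDB accepts for writes. The measurement, tag, and field names (`pipeline_metrics`, `camera`, `module`, `fps`, `objects`) are hypothetical and do not describe the schema Ocellus actually uses.

```python
def _escape(text, chars):
    """Backslash-escape every character from `chars` that occurs in `text`."""
    for ch in chars:
        text = text.replace(ch, "\\" + ch)
    return text


def _format_field(value):
    """Render a field value using line protocol type rules."""
    # bool must be tested before int because bool is a subclass of int.
    if isinstance(value, bool):
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"  # integers carry an 'i' suffix
    if isinstance(value, float):
        return repr(value)
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'  # strings are double-quoted


def to_line_protocol(measurement, tags, fields, timestamp_ns=None):
    """Format one point as: measurement,tags fields [timestamp]."""
    if not fields:
        raise ValueError("a point needs at least one field")
    line = _escape(measurement, ", ")
    for key in sorted(tags):
        line += f",{_escape(key, ',= ')}={_escape(str(tags[key]), ',= ')}"
    line += " " + ",".join(
        f"{_escape(key, ',= ')}={_format_field(value)}" for key, value in fields.items()
    )
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"
    return line


# Hypothetical metric names, used only for illustration.
line = to_line_protocol(
    "pipeline_metrics",
    {"camera": "cam 1", "module": "blob"},
    {"fps": 29.5, "objects": 3},
    1700000000000000000,
)
print(line)
```

Tags are indexed and suit values you filter by in a dashboard (camera, module), while fields hold the measured numbers.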

AI Sync Service

The AI Sync Service is an application that keeps the Neural Network models used by Ocellus up to date. It streamlines the process of synchronizing those models whenever they are changed.

RTSPtoWEB

RTSPtoWEB is a service that converts video streams in real time from RTSP (Real Time Streaming Protocol) to a format suitable for web playback.

Ocellus Vision System Modules

Ocellus has various algorithms and modules to analyze and interpret visual information. These modules play a crucial role in enhancing the system's performance and enabling it to detect, track, and classify objects within a scene accurately. Here we will list some features and benefits of each module, as well as their potential applications in different industries.

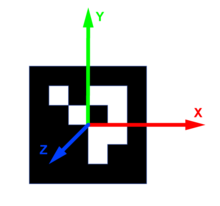

ArUco Module

The ArUco module is a feature of our vision system that allows for accurate and reliable detection and tracking of planar objects, such as markers or tags, in real-time. ArUco markers are 2D barcodes that are designed to be easily recognizable by a camera, making them an ideal tool for visual tracking applications.

Using the ArUco module, our vision system can accurately detect and track these markers, even in complex and cluttered environments. The module is capable of identifying multiple markers simultaneously.

Blob Module

The Blob module detects objects of interest in an image. By analyzing the color or brightness of the pixels, it identifies regions that are likely to correspond to objects of interest, such as products on a conveyor belt or defects in a manufacturing process.

The module can be configured to detect objects of different sizes and shapes, and can be customized to work with different lighting conditions and backgrounds. This makes it ideal for a wide range of industrial and commercial applications, such as quality control and inspection.

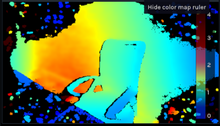

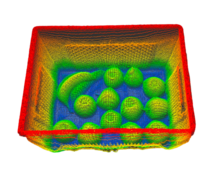
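The general idea of brightness-based blob detection can be sketched as thresholding followed by connected-component labeling. The code below is a minimal pure-Python illustration under that assumption; it is not the Ocellus implementation, and the function name and parameters are hypothetical.

```python
from collections import deque


def find_blobs(image, threshold, min_area=1):
    """Return area and bounding box of each bright, 4-connected region.

    `image` is a 2D list of grayscale values; pixels >= threshold are foreground.
    Bounding boxes are (x_min, y_min, x_max, y_max).
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] < threshold:
                continue
            # Flood-fill one connected region starting at (r, c).
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_area:
                ys = [p[0] for p in pixels]
                xs = [p[1] for p in pixels]
                blobs.append({"area": len(pixels),
                              "bbox": (min(xs), min(ys), max(xs), max(ys))})
    return blobs


image = [
    [0,   0,   0, 0,   0],
    [0, 200, 210, 0,   0],
    [0, 220,   0, 0,   0],
    [0,   0,   0, 0, 180],
]
blobs = find_blobs(image, threshold=128, min_area=2)
print(blobs)
```

Here `min_area` plays the role of a size filter: the single bright pixel in the corner is discarded as noise, while the three-pixel region is reported.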

Colorized Depth Module

The Colorized Depth module takes a point cloud and converts it into a colored image, which can be easier to process and analyze and allows for more intuitive interpretation and segmentation of objects in the image.

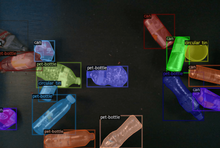

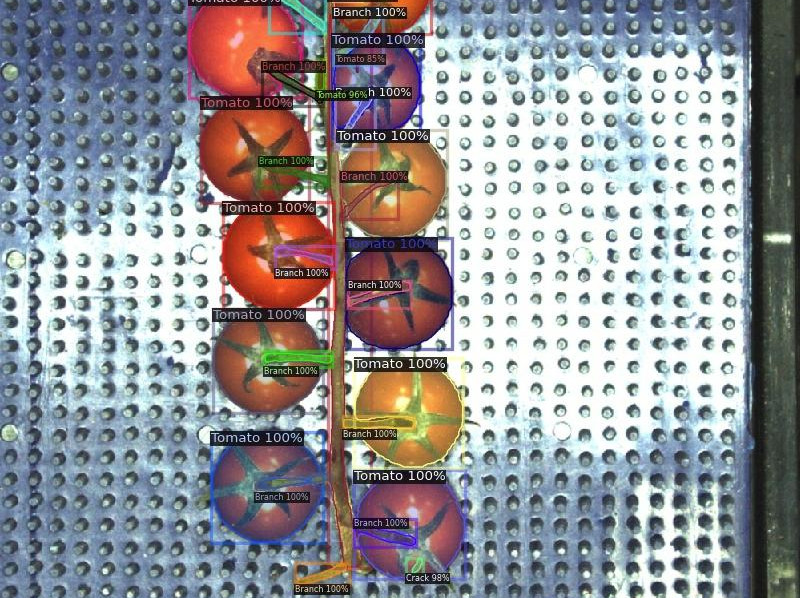
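One common way to colorize depth is to normalize each depth value between a near and a far limit and map it onto a color ramp. The sketch below assumes the point cloud is organized as a 2D grid of depth values (with `None` for missing points) and uses a simple red-green-blue ramp; the actual color map and input layout used by Ocellus are not specified here.

```python
def depth_to_rgb(depth, near, far):
    """Map one depth value to an RGB tuple: near = red, middle = green, far = blue."""
    if depth is None:
        return (0, 0, 0)  # missing points are rendered black
    t = min(max((depth - near) / (far - near), 0.0), 1.0)
    if t < 0.5:
        s = t / 0.5
        return (round(255 * (1 - s)), round(255 * s), 0)
    s = (t - 0.5) / 0.5
    return (0, round(255 * (1 - s)), round(255 * s))


def colorize(depth_map, near, far):
    """Convert a 2D grid of depth values into a 2D grid of RGB tuples."""
    return [[depth_to_rgb(d, near, far) for d in row] for row in depth_map]


# Depth values in millimeters; the range 500-1000 mm is an arbitrary example.
depth_map = [
    [500.0, 750.0],
    [1000.0, None],
]
colored = colorize(depth_map, near=500.0, far=1000.0)
print(colored)
```

Values outside the near/far range are clamped, so choosing the range to match the working distance makes the best use of the available colors.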

DNN Module

Our vision system includes an AI-based feature that utilizes Mask R-CNN (Region-based Convolutional Neural Network) to detect and track objects. Mask R-CNN is a deep learning-based object detection algorithm that can accurately detect and classify objects within an image while also generating a pixel-level segmentation mask for each object.

By utilizing Mask R-CNN, Ocellus can accurately detect and track objects in real-time, even in complex and cluttered environments. The algorithm is capable of accurately identifying objects of different sizes, shapes, and orientations, making it a powerful tool for a wide range of applications.

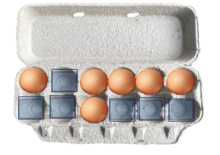

Grid Module

The grid module divides a region of interest into multiple smaller cells with a defined size. The module then analyzes each cell and determines whether or not it can accommodate an object of a specific size and shape. By doing this, the system can accurately detect the optimal placement of objects within the region, taking into account the dimensions and shapes of the objects and maximizing the use of the available space.
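A simplified version of this idea can be sketched as follows, assuming the region of interest is available as a binary occupancy mask and that the cell size is chosen to match the footprint of the object to be placed. The function name and the mask representation are illustrative assumptions, not the Ocellus API.

```python
def free_cells(occupied, cell_h, cell_w):
    """Return (row, col) indices of grid cells that contain no occupied pixel.

    `occupied` is a 2D list where a truthy value marks an occupied pixel.
    Partial cells at the right and bottom edges are ignored.
    """
    rows, cols = len(occupied), len(occupied[0])
    result = []
    for top in range(0, rows - cell_h + 1, cell_h):
        for left in range(0, cols - cell_w + 1, cell_w):
            cell_pixels = (
                occupied[y][x]
                for y in range(top, top + cell_h)
                for x in range(left, left + cell_w)
            )
            if not any(cell_pixels):
                result.append((top // cell_h, left // cell_w))
    return result


mask = [
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 1],
]
cells = free_cells(mask, cell_h=2, cell_w=2)
print(cells)
```

In this example the top-left and bottom-right cells each contain an occupied pixel, so only the other two cells are reported as available placements.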

Object Fence Module

The object fence module is particularly suited to applications such as pallet inspection. This module enables the system to accurately track objects and create object fences around them.

Object fences are virtual barriers that define the boundaries of an object, allowing the vision system to track its movement accurately. In a pallet inspection application, the object fence module can be used to create a virtual fence around a pallet or groups of items on a pallet. This enables the vision system to accurately determine the position and rotation of the pallet and ensure that the items are correctly placed on the pallet.
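As an illustration of how a fence with a position and rotation can be used to check item placement, the sketch below models a fence as an oriented rectangle and tests whether item positions fall inside it. The `Fence` class, its fields, and the dimensions are hypothetical and only demonstrate the geometry; they do not reflect how Ocellus represents fences internally.

```python
import math
from dataclasses import dataclass


@dataclass
class Fence:
    """An oriented rectangular fence in the plane (units are arbitrary, e.g. mm)."""
    cx: float
    cy: float
    width: float
    height: float
    angle_deg: float

    def contains(self, x, y):
        """Return True if the point (x, y) lies inside the fence."""
        a = math.radians(self.angle_deg)
        dx, dy = x - self.cx, y - self.cy
        # Rotate the offset by -angle to express it in the fence's own frame.
        local_x = dx * math.cos(a) + dy * math.sin(a)
        local_y = -dx * math.sin(a) + dy * math.cos(a)
        return abs(local_x) <= self.width / 2 and abs(local_y) <= self.height / 2


# Illustrative pallet-sized fence, rotated by 90 degrees.
fence = Fence(cx=0.0, cy=0.0, width=1200.0, height=800.0, angle_deg=90.0)
items = [(0.0, 500.0), (500.0, 0.0), (100.0, -100.0)]
misplaced = [p for p in items if not fence.contains(*p)]
print(misplaced)
```

Because the check is done in the fence's own coordinate frame, the same test works regardless of how the pallet is rotated in the camera image.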

Point Cloud Module

The Point Cloud module manipulates and filters point clouds. It works with a wide range of sensors, such as lidar and stereo cameras, and can process data from multiple sources simultaneously, removing noise and other unwanted artifacts before further processing.
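Two typical point cloud filters are a pass-through filter, which keeps only points within a depth range, and voxel grid downsampling, which replaces all points in each voxel with their centroid. The sketch below shows both in plain Python as an illustration of the concepts; the function names are hypothetical and the filters offered by Ocellus may differ.

```python
import math


def passthrough(points, z_min, z_max):
    """Keep only points whose z coordinate lies within [z_min, z_max]."""
    return [p for p in points if z_min <= p[2] <= z_max]


def voxel_downsample(points, voxel):
    """Replace all points that fall into the same cubic voxel by their centroid."""
    buckets = {}
    for x, y, z in points:
        key = (math.floor(x / voxel), math.floor(y / voxel), math.floor(z / voxel))
        buckets.setdefault(key, []).append((x, y, z))
    return [
        tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for pts in buckets.values()
    ]


# Coordinates in meters; the last point is a far-away outlier.
cloud = [
    (0.01, 0.01, 0.5),
    (0.03, 0.01, 0.5),
    (0.50, 0.50, 0.5),
    (0.00, 0.00, 5.0),
]
filtered = voxel_downsample(passthrough(cloud, z_min=0.0, z_max=2.0), voxel=0.1)
print(filtered)
```

Running the pass-through filter first removes the outlier cheaply, and the voxel step then merges the two nearby points into one.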

Remote Module

The Remote module provides third-party integration into the Ocellus core module system. See: Module Service API

Vive Tracker Module

The Vive Tracker module is intended for applications that require accurate and reliable tracking of objects in 3D space. The Vive Tracker is a small, wireless device that can be attached to a variety of objects, such as tools, equipment, robots and even people, allowing Ocellus to track their movements and positions in real time.

One of the key benefits of the Vive Tracker module is its high accuracy and precision. The module uses advanced sensors and algorithms to track the position and orientation of objects in a 3D space with sub-millimeter accuracy. It is also highly customizable and can be programmed to perform a wide range of tasks. For example, it can be used to trigger events or actions based on the position or movement of objects, or to provide feedback to users based on their movements or interactions with the environment.
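Triggering an action from a tracked position can be sketched as a zone check that fires once each time the tracker enters a box-shaped region. The example below is a generic illustration that does not use any Vive or Ocellus API; the `ZoneTrigger` class is hypothetical and positions are assumed to be (x, y, z) tuples in meters.

```python
class ZoneTrigger:
    """Calls `on_enter` once each time a tracked position enters a box zone."""

    def __init__(self, min_corner, max_corner, on_enter):
        self.min_corner = min_corner
        self.max_corner = max_corner
        self.on_enter = on_enter
        self._inside = False

    def update(self, position):
        """Feed the latest position; returns True while inside the zone."""
        inside = all(
            lo <= value <= hi
            for lo, value, hi in zip(self.min_corner, position, self.max_corner)
        )
        # Fire only on the transition from outside to inside.
        if inside and not self._inside:
            self.on_enter(position)
        self._inside = inside
        return inside


events = []
trigger = ZoneTrigger((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), events.append)
poses = [
    (2.0, 0.5, 0.5),  # outside
    (0.5, 0.5, 0.5),  # enters the zone
    (0.6, 0.5, 0.5),  # still inside, no new event
    (2.0, 0.5, 0.5),  # leaves
    (0.9, 0.9, 0.9),  # enters again
]
for pose in poses:
    trigger.update(pose)
print(events)
```

Firing on the outside-to-inside transition, rather than on every sample inside the zone, avoids repeating the action at the tracker's update rate.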

API Interfaces

- Web Service Swagger

- Lightweight TCP/IP ASCII API

- Data Flow Service API via gRPC: ocellus_module_service.proto and ocellus_types.proto

- Module Service API

Supported Cameras

Ocellus supports a diverse array of cameras, regardless of brand or model. Most cameras are supported through Common Vision Blox (CVB), which is compatible with numerous standard and specialized hardware. This includes all GigE Vision and USB3 Vision GenICam-compliant cameras from a variety of manufacturers.

Furthermore, Ocellus supports additional cameras and sensors beyond those compatible with CVB. Here is a list of several other camera types that Ocellus supports:

| Camera | Description | Product Link |

|---|---|---|

|

Area scan cameras provide high-resolution images and can be used to detect and inspect features on products with a high degree of accuracy. Additionally, they can be used in challenging environments and offer fast image acquisition, making them suitable for use in high-speed production lines or other fast-paced applications. | Area Scan Cameras |

|

Time-of-Flight (ToF) cameras offer a fast and accurate depth measurement in real-time. ToF cameras also have a greater depth range than structured light cameras and are less sensitive to external light sources. | Lucid 3D cameras |

|

3D structured light cameras are designed to provide higher accuracy and faster performance than other 3D cameras. It has a 3D structured light sensor that captures images in vivid color and with a high level of detail. | Zivid Two 3D camera |

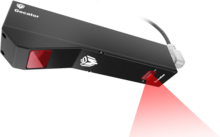

|

Laser Profiler is ideal for several applications including precision measurement for a variety of materials, such as wood, plastics, textiles, metals, and more. It is also used for quality control in many industries. It can be used to measure the shape, size, and thickness of parts, as well as identifying defects. | Gocator laser 3D profilers |

|

Line scanner camera is ideal for high-speed, high-resolution line scan applications. It is designed for industrial imaging applications capturing images of objects moving along a conveyor belt such as detecting defects, measuring dimensions, color sorting and recognizing patterns. | Line Scanners |

|

Ocellus supports any PC compatible webcam. Webcams are affordable, easy to install, and have a wide range of features. They can be used to monitor production lines to detect and alert workers of potential issues, as well as to automate processes. Additionally, webcams can be used in quality assurance and for tracking, tracing products, provide detailed analysis to improve efficiency and quality. | |

|

Outdoor surveillance cameras are designed to operate in all weather conditions. They can also be equipped with night vision that can help you monitor your factory more effectively. Furthermore, outdoor cameras are more durable than webcams and can withstand harsher environmental conditions, such as rain, snow, and extreme temperatures. | ReoLink |

|

Hyperspectral captures images in many narrow, contiguous spectral bands across the electromagnetic spectrum, including the visible, near-infrared, and shortwave infrared regions. The camera can capture a large number of spectral bands, typically hundreds or even thousands, with each band containing information about the reflectance or emission properties of the materials. It can distinguish between materials that look similar in visible light, such as plastics and seeds, by detecting their unique spectral fingerprints. | Specim FX Series |

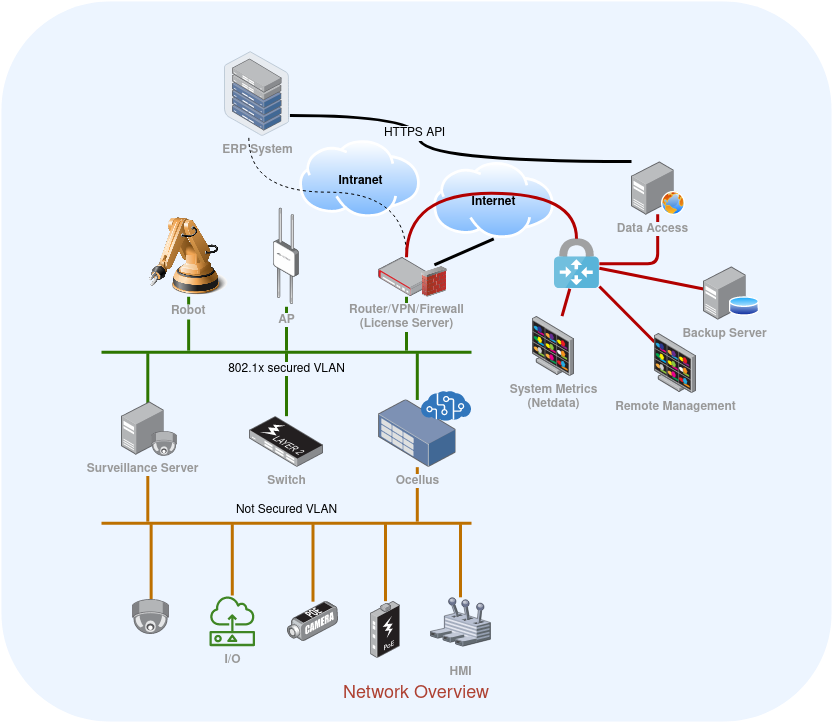

Network Overview

Ocellus AI Cloud

The Ocellus AI Cloud web portal is a tool for businesses and organizations that require advanced image processing and analysis capabilities. The platform enables users to upload images from production environments and use them to train custom Neural Network models for various applications.

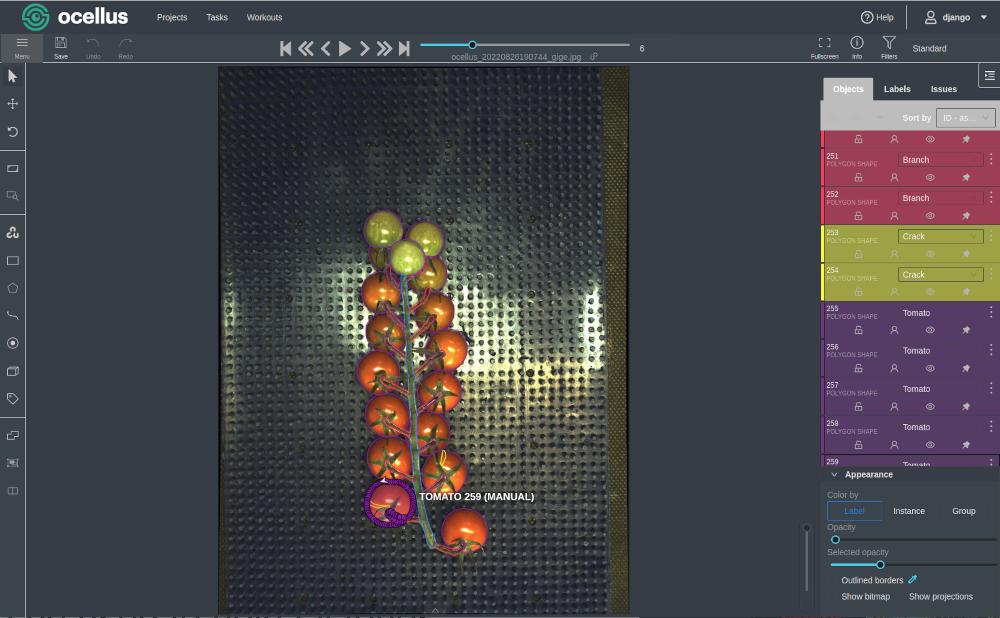

Annotation tool

The Ocellus AI Cloud web portal provides a user-friendly interface for annotating images. Users can easily add labels, tags, and other metadata to images, which can then be used to train machine learning algorithms. This process is essential for building accurate and robust Neural Network models that can perform specific tasks, such as object detection, classification, and segmentation.

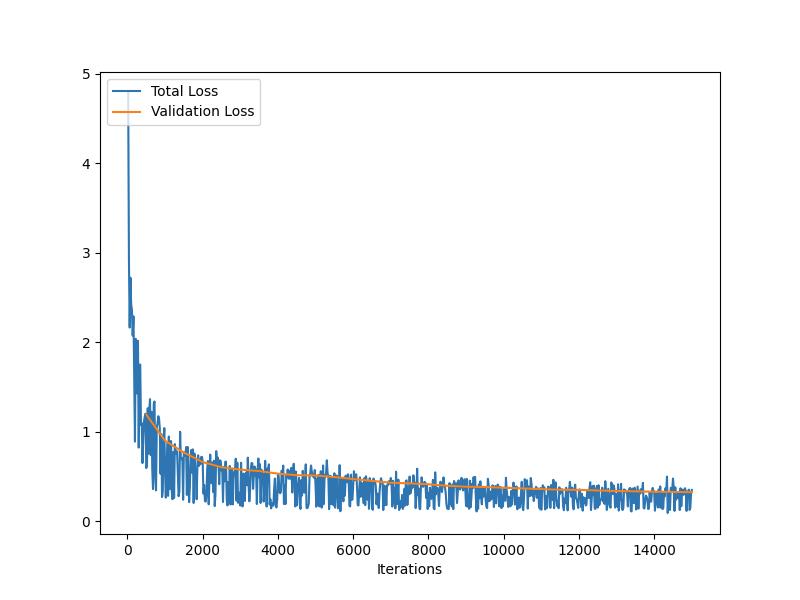

Once the images have been annotated, the AI cloud web portal can be used to train and refine Neural Network models. Users can choose from a variety of machine learning algorithms, image augmentations and other parameters, and can monitor the progress of the training process in real-time. The portal also provides tools for evaluating the performance of the Neural Network models, which helps users optimize the models for specific applications.

Ocellus AI Cloud is hosted at ai.ocellus.io.

Figures: model validation example and model training progress.